
Using the Hosted Backend

Using the Layercode Hosted Backend to power your voice agent.

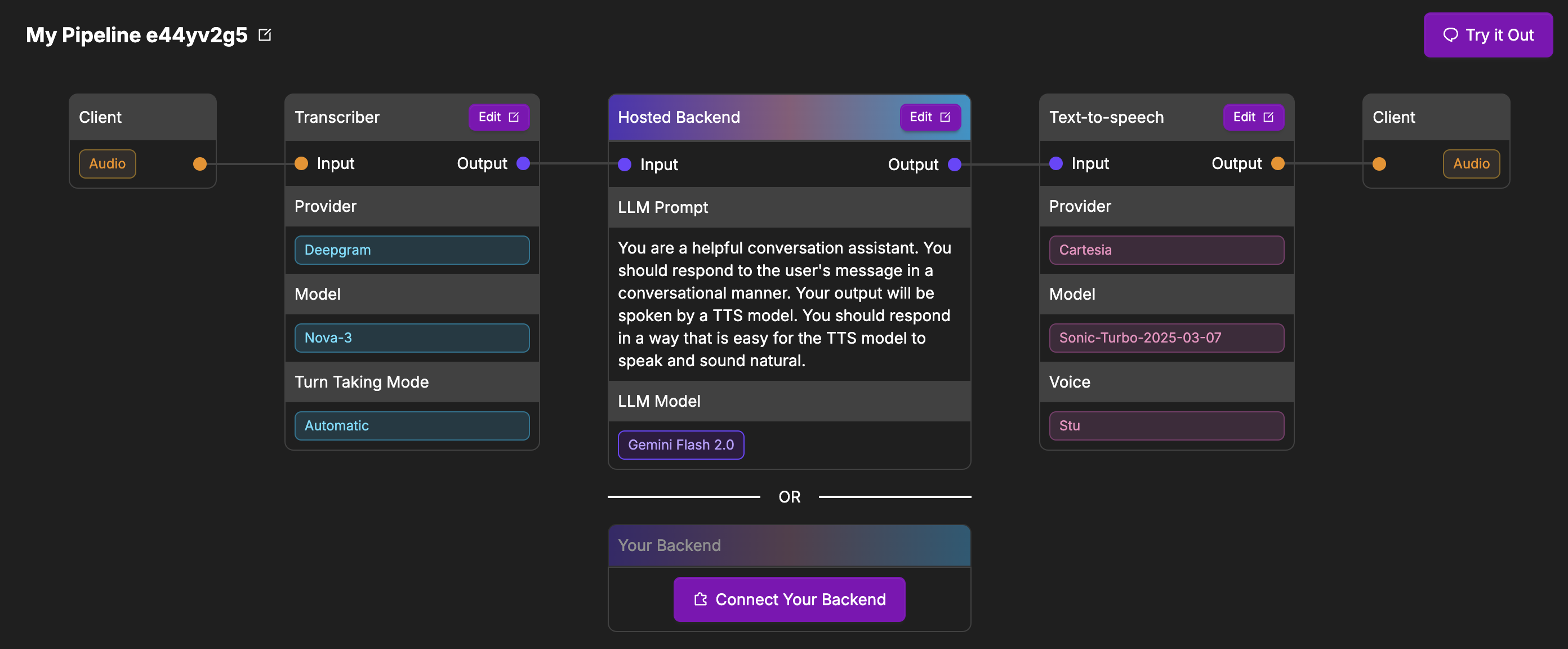

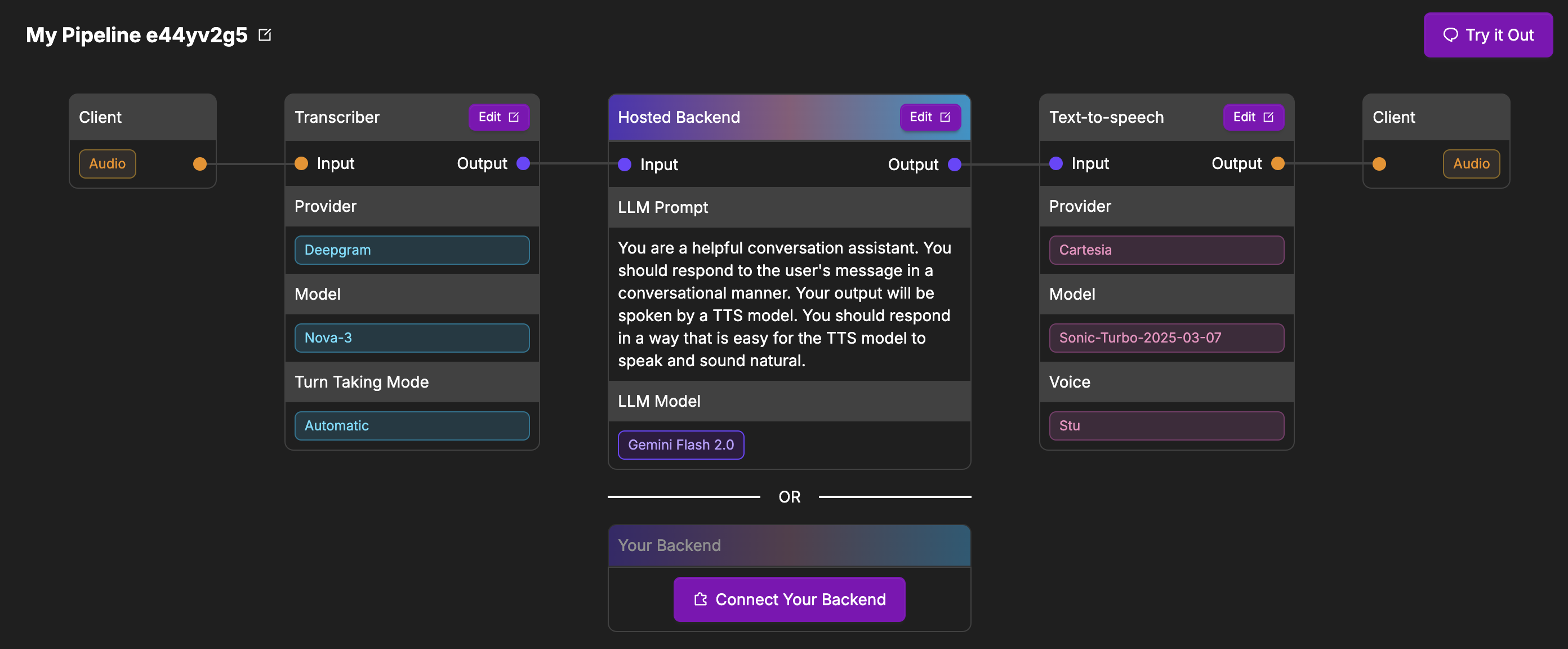

Layercode offers a hosted backend option that lets you build and deploy voice agents without writing the agent backend yourself. In this mode, Layercode handles the backend logic for you. When a user speaks, Layercode sends the transcribed query, together with your customizable prompt, to a large language model (LLM). It then streams the AI-generated response back to the user as speech.
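To make the flow above concrete, here is a minimal, hypothetical Python model of a single conversational turn. `Conversation`, `handle_turn`, `stream_llm`, `speak`, and `fake_llm` are invented for illustration and are not part of any Layercode API. In hosted mode Layercode runs this loop for you. The sketch assumes a chat-style message list (system prompt, prior turns, new user turn) and forwards each streamed chunk straight to text-to-speech. A real pipeline may buffer chunks differently.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterator, List

Message = Dict[str, str]


@dataclass
class Conversation:
    """Hypothetical stand-in for the prompt and history the hosted backend keeps."""
    system_prompt: str
    history: List[Message] = field(default_factory=list)

    def messages_for_llm(self, user_text: str) -> List[Message]:
        # System prompt first, then prior turns, then the new user turn.
        return (
            [{"role": "system", "content": self.system_prompt}]
            + self.history
            + [{"role": "user", "content": user_text}]
        )


def handle_turn(
    convo: Conversation,
    transcript: str,
    stream_llm: Callable[[List[Message]], Iterator[str]],
    speak: Callable[[str], None],
) -> str:
    """One turn: transcribed user speech in, streamed spoken reply out."""
    messages = convo.messages_for_llm(transcript)
    reply_parts: List[str] = []
    for chunk in stream_llm(messages):
        speak(chunk)  # hand each chunk to text-to-speech as soon as it arrives
        reply_parts.append(chunk)
    reply = "".join(reply_parts)
    # Record both sides of the turn so the next turn has context.
    convo.history.append({"role": "user", "content": transcript})
    convo.history.append({"role": "assistant", "content": reply})
    return reply


def fake_llm(messages: List[Message]) -> Iterator[str]:
    # Hypothetical stub LLM: echoes the latest user message in two chunks.
    yield "You said: "
    yield messages[-1]["content"]


spoken: List[str] = []
convo = Conversation(system_prompt="You are a friendly receptionist.")
reply = handle_turn(convo, "What are your opening hours?", fake_llm, spoken.append)
print(reply)  # You said: What are your opening hours?
```

Changing `system_prompt` is the analogue of customizing the prompt, and the growing `history` list mirrors the conversation history that the hosted backend keeps between turns.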

Features

- Responses are generated by a fast, low-latency LLM selected by Layercode (currently Gemini 2.0 Flash)

- Conversation history is stored in Layercode’s cloud

- You can customize the prompt to change the behavior of the voice agent

- Integrate with your web or mobile frontend, or connect to inbound or outbound phone calls

- You keep complete control over the transcription, turn-taking, and text-to-speech settings of the voice pipeline

We’re actively working to expand the hosted backend’s capabilities. Upcoming features include MCP (Model Context Protocol) tools.